As readers know, we live in an age of widespread disinformation. From political campaigns in various nations to the recent false reports of an explosion at the Pentagon, such disinformation is meant to sow distrust and confusion and to serve other malicious ends. Given the rise of artificial intelligence (including large language models) and the ease of creating images, video, audio and more, we at Web Professionals Global have joined the Content Authenticity Initiative. [Like all links, this will open in a new browser tab.] You might have to scroll a bit to find us, as there are now over 1,000 members of this initiative (organizations and citizens).

What is CAI?

Essentially, CAI (Content Authenticity Initiative) is a community of tech companies, non-governmental organizations (like Web Professionals Global), academics and others working to “promote adoption of an open industry standard for content authenticity and provenance.” What is the source of the image, video, or audio in question? How was it created? If something has been tampered with, this is tracked and evident when examining the metadata. If you are not a member, we encourage you to join (either as an individual or a company). We are all about community (it is the first item in our tag line). We also believe strongly in copyright as well as the origin of media. CAI fits easily into our worldview.
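To make the idea of tamper evidence concrete, here is a minimal Python sketch. It is only an illustration of the principle, not the actual content credentials format: the `make_manifest` and `matches_manifest` helpers and the record fields are hypothetical names chosen for this example. Real content credentials are also cryptographically signed, which this sketch leaves out. The point is simply that a provenance record can store a hash of the content, so any later change to the content no longer matches the record.

```python
import hashlib
import json


def make_manifest(content: bytes, creator: str, tool: str) -> dict:
    """Build a toy provenance record bound to the content by its SHA-256 hash."""
    return {
        "creator": creator,
        "tool": tool,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }


def matches_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the content still hashes to the recorded value."""
    return hashlib.sha256(content).hexdigest() == manifest["content_sha256"]


# Stand-in bytes; a real example would read an image file instead.
original = b"pretend these bytes are an orchid photo"
manifest = make_manifest(original, creator="Example Photographer", tool="Example Editor")

# Simulate someone altering the content without updating the record.
tampered = original + b" (edited without updating the record)"

print(json.dumps(manifest, indent=2))
print("original matches:", matches_manifest(original, manifest))
print("tampered matches:", matches_manifest(tampered, manifest))
```

In this toy version anyone could simply recompute the hash after editing. That is why signing the record matters in practice.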

Not only will this help disprove misleading information; these tools can also help verify atrocities being committed. There are even apps one can install on a mobile phone to prove the provenance of any image taken by that device (and any subsequent manipulation).

An example (how to get started)

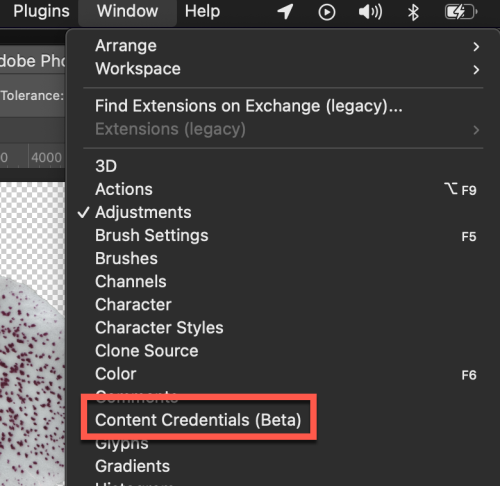

If you are using tools like Photoshop 2023 or Lightroom 2023, new capabilities exist to help with content credentials. For our purposes, let’s use Photoshop. First, one needs to enable this beta capability. From the main menu, select Window > Content Credentials (Beta).

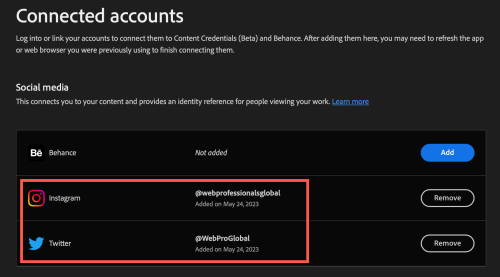

You then enable content credentials and link to your social media accounts. In this case, I have linked both our Twitter feed and Instagram feed. This provides identifying references for individuals viewing your work.

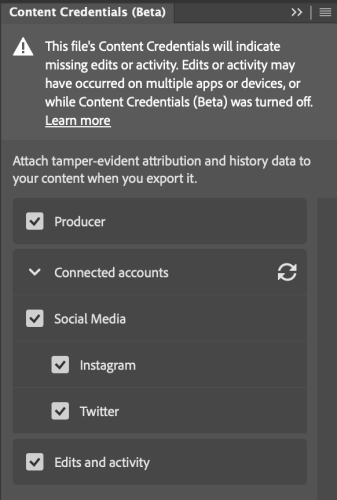

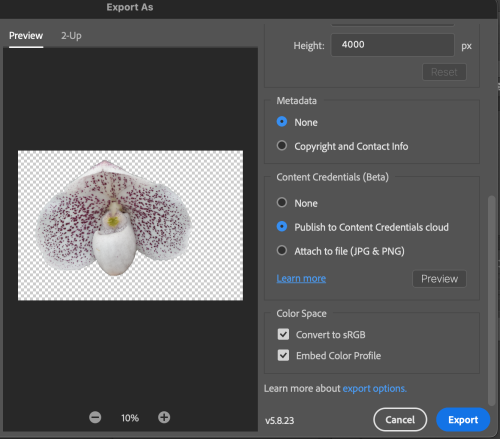

You can also choose to publish your content credentials to the cloud. The alternative is to attach them to your files (which increases the size of each file). When you refresh the Content Credentials panel, you will see the linked accounts in Photoshop.

When you work with images, you can then preserve the content credentials as part of your file saving/exporting process. In this case, I removed the background from one of my orchid photos (generated by stacking a number of images together). Before exporting, these are some of the data one can include.

OK, content credentials are now in the metadata. Now what?

Inspecting images

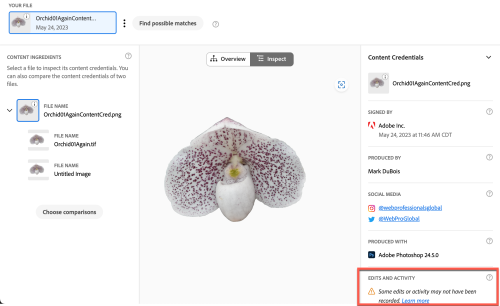

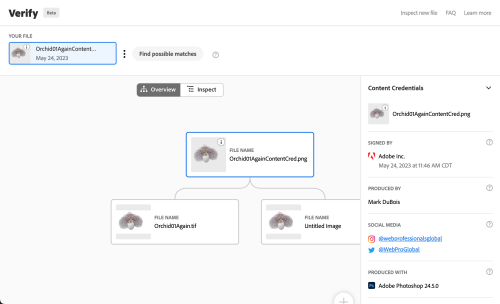

Perhaps one encounters the stacked image of an orchid online. We all know it is not possible to take a macro photo of a flower with such detail unless there has been some manipulation. One can use a tool like Verify (at the Content Authenticity Initiative site) to learn more about the image. Note that the image is being examined on your local computer (and is not being uploaded during this checking process). This is what I see when I examine the orchid image.

Note that the tool recognizes that there were prior edits to this image (before the CAI was activated). However, one can also see the additional information. In addition to the inspection tab, there is an overview (for those who like to see the origins).
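For those curious about what such a tool looks for, here is a rough Python sketch that checks whether a JPEG appears to carry attached content credentials. It rests on one assumption taken from the C2PA specification: the credentials are stored in JPEG APP11 segments inside a box labelled `c2pa`. The function names are hypothetical. This is only a presence check and validates nothing (no signatures, no hashes), so it is no substitute for Verify. It also will not find credentials that were published to the cloud rather than attached to the file.

```python
import struct


def iter_jpeg_segments(data: bytes):
    """Yield (marker, payload) for each header segment of a JPEG byte string."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # lost sync; stop rather than guess
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker
            pos += 1
            continue
        if marker == 0x01 or 0xD0 <= marker <= 0xD7:  # markers without a length
            pos += 2
            continue
        if marker in (0xDA, 0xD9):  # start of scan / end of image
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            break  # malformed segment
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def seems_to_have_content_credentials(data: bytes) -> bool:
    """Heuristic: is there an APP11 (0xEB) segment mentioning the 'c2pa' label?"""
    return any(
        marker == 0xEB and b"c2pa" in payload
        for marker, payload in iter_jpeg_segments(data)
    )


def _segment(marker: int, payload: bytes) -> bytes:
    """Build one JPEG segment (used only for the synthetic demo data below)."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


# Synthetic stand-ins, not real images: just enough structure to exercise the scan.
fake_with = (
    b"\xff\xd8" + _segment(0xE0, b"JFIF\x00")
    + _segment(0xEB, b"JP\x00\x00jumb....c2pa\x00") + b"\xff\xd9"
)
fake_without = b"\xff\xd8" + _segment(0xE0, b"JFIF\x00") + b"\xff\xd9"

print(seems_to_have_content_credentials(fake_with))
print(seems_to_have_content_credentials(fake_without))
```

To try it on a real file, one could read the bytes with `pathlib.Path("orchid.jpg").read_bytes()` (the file name here is just a placeholder) and pass them to `seems_to_have_content_credentials`.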

Of course, that was an overview of my efforts working with actual images. What about AI-generated images?

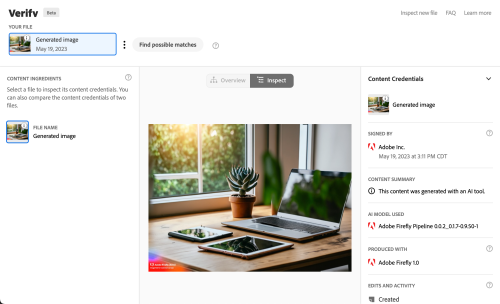

AI and C2PA

Tools (like Adobe Firefly) utilize open technology (the C2PA standard in this case). If you follow the link to C2PA, there is an introduction video. Without getting into the weeds, here is what one sees when testing the content authenticity of a Firefly-generated image. Note that there is no visual overview, as this image was entirely machine-generated. One can clearly see the origin of the image.

Perhaps the CAI will help us better deal with the wave of misinformation being generated. Time will tell. In the interim, we encourage you to try out these open-source tools. As always, we are curious what you think. Let us know in the comments.